AI Index Report 2024: Hits and Misses

In this article, the author analyzes the latest report from Stanford HAI and shares his perspective on what future reports should look like.

By Nanjunda Pratap Palecanda

Seldom does a day pass when new updates around Artificial Intelligence (AI) fail to grab eyeballs. Media reports usually cover a bevy of events, from the launch of a new app or product to another startup in the field getting funded, or one of the Big Tech companies signing up with a leading AI provider to power its solution.

There are other newsmakers too, such as a big company appointing a Chief AI Officer or some government creating a new position called Minister for AI. Amidst all this noise, the latest AI Index Report (2024) coming from the Stanford Institute for Human-Centered AI (HAI) has every chance of being glossed over.

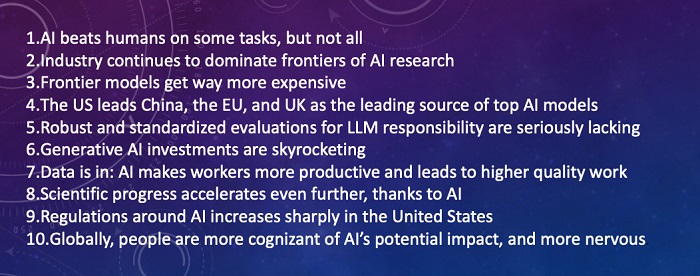

The report (download it here) provides a detailed overview of the latest advances in AI, emerging trends, and resultant challenges in the field. In a nutshell, it delves into ten key points.

While all of this information is indeed invaluable, there is a need for a deeper understanding to address the multifaceted impact of AI. For example, while the report highlights the increase in AI regulations, it does not provide specific policy recommendations or frameworks that governments and organisations could adopt.

For starters, if one really needs to understand and address the multifaceted impact of AI on the future, this report (and others like it) must include deeper analyses of ethical and social implications, long-term risks, economic impact, public perception and environmental issues. Towards this end, detailed policy recommendations would be welcome.

By broadening the scope of its analysis, the AI Index can offer more comprehensive guidance to policymakers, researchers, and industry leaders navigating the complexities of AI. Incorporating these recommendations would ensure that the AI Index remains a crucial resource for understanding AI’s trajectory and its broader impacts on society.

Having set out what those directly and indirectly involved in the development and use of AI-led innovations would expect, let’s now review the current report. Here are some key takeaways:

- Generative AI investments: Contrary to popular belief, overall AI investments actually dropped to $189.2 billion during 2023, a 20% drop from 2022. Private investments fell marginally, but the most significant downturn was in mergers and acquisitions, which fell 31.2%. Of course, this drop has come after a 13-fold hike in AI-related investments. In 2023, venture capital flows dropped for the second successive year, but there was a spike in newly funded Gen AI startups, which touched 99, up from 56 a year ago and 31 in 2019. It goes without saying that the US cornered a majority of this funding, with Indian startups raising barely 2% of that figure.

- Surpassing human ability: AI surpassed human ability across many benchmarks, such as image classification, visual reasoning, and English understanding. But it still trails in complex tasks like competition-level mathematics, visual common sense reasoning and planning. Strong multimodal models such as Gemini and GPT-4 represented advancements, but with caveats around certain types of queries. They handled images, text and videos but threw up meme-worthy results too. The use of AI to create more data enhances current capabilities and paves the way for future algorithmic improvements, especially on harder tasks.

- Less Responsible AI: The research also revealed a continued lack of standardization in responsible AI reporting. Political deepfakes affecting elections are not new, but the report says AI-based detection methods aren’t accurate across the board. On top of this, projects like CounterCloud show how easily AI can disseminate fake content while the ability to detect it remains very low. This is why the survey highlights major concerns such as privacy, security, and reliability. There is also the question of copyright, as outputs from LLMs could result in violations, and a lack of transparency in AI development, specifically around training data and methods. The report notes that AI incidents have grown 20-fold since 2013, and it also cites findings that ChatGPT shows a bias towards Democrats in the US and the Labour Party in the UK.

- Collaborative and Open: Industry continues to dominate development, producing 51 notable machine learning models in 2023 while academia brought out just 15. Another 21 models came from industry-academia collaborations, while 149 foundation models were released, double the number released in 2022. Of these, over 65% were open source.

- AI and healthcare: The launch of AI-led applications such as AlphaDev and GNoME brought higher efficiencies to algorithmic sorting and materials discovery respectively. The year also saw the launch of systems such as EVEscape, which enhances pandemic prediction, and AlphaMissense, which assists mutation classification. Since the introduction of the benchmark in 2019, AI performance on MedQA has nearly tripled. In 2022, the FDA approved 139 AI-related medical devices, a 12% rise from 2021.

- Rising AI Regulations: The report analyzed legislation mentioning AI in 128 countries over the past seven years, of which 32 have enacted at least one AI-related law. Mentions of AI in legislative proceedings worldwide grew from 1,247 in 2022 to 2,175 in 2023. To date, 75 national AI strategies have been unveiled, including one by India in 2018.

- Public Sentiment: The survey notes a rise in public concern over how AI can impact people’s lives. While 52% of Americans are more concerned than excited about AI, only 37% feel it would enhance their work. Only 34% anticipate AI will boost the economy and 32% believe it will enhance the job market, while 59% of Gen Z respondents believe AI will improve entertainment options.
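The year-over-year comparisons quoted in the takeaways above can be checked with simple arithmetic. The following is a minimal Python sketch and not something taken from the report itself. The helper names `percent_change` and `implied_baseline` are hypothetical, and the inputs are only the figures as quoted in this article.

```python
def percent_change(old: float, new: float) -> float:
    """Return the percentage change going from old to new."""
    return (new - old) / old * 100.0


def implied_baseline(current: float, pct_drop: float) -> float:
    """Back out the earlier value, given the current value and a percentage drop."""
    return current / (1.0 - pct_drop / 100.0)


# Figures as quoted in the takeaways above (assumed to be transcribed correctly).
startups_2022, startups_2023 = 56, 99              # newly funded Gen AI startups
legislative_2022, legislative_2023 = 1247, 2175    # legislative counts for 2022 and 2023
investment_2023_bn, investment_drop_pct = 189.2, 20.0

startup_growth = percent_change(startups_2022, startups_2023)
legislative_growth = percent_change(legislative_2022, legislative_2023)
investment_2022_bn = implied_baseline(investment_2023_bn, investment_drop_pct)

print(f"Newly funded Gen AI startups: {startup_growth:.1f}% increase")
print(f"Legislative figure: {legislative_growth:.1f}% increase")
print(f"Implied 2022 investment total: ${investment_2022_bn:.1f} billion")
```

On these inputs, the startup count grows by roughly three quarters in a year, the legislative figure rises by a similar proportion, and a 20% drop to $189.2 billion implies a 2022 total of about $236.5 billion.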

While the report underscores the rapid pace of AI progress and its growing integration into business operations, it also brings out the challenges that require careful management. Growing investments are shifting leadership in AI development, adding to concerns around AI’s impact on jobs.

The need for robust frameworks to guide the responsible development and use of AI technologies requires policymakers, industry leaders, and researchers to work together to ensure that AI technologies are developed and deployed in ways that are ethical, transparent, and beneficial to society.

As mentioned earlier, reports such as this one, backed by exhaustive research, need to pack more muscle in the future. When it comes to ethical and social implications, the report does not engage deeply with how AI could exacerbate existing social inequalities or introduce new ethical dilemmas.

Future reports must include a dedicated section on this front covering some of the following points:

- Impact on employment is key, as discussed in my book, What on Earth is AI: Exploring AI in the 80/20 Future, which lists 20 industries in which 80% of jobs will be displaced in the next 10 years.

- There is the issue of bias and fairness, and how AI can perpetuate or reduce biases in decision-making.

- The digital divide is another factor that such reports should explore, as AI could potentially widen or bridge the gap between socioeconomic groups.

- Real-world examples of AI impact on society already exist and the report could present detailed case studies of such technologies in future editions.

- There isn’t enough mention of long-term risks. Future reports could conduct a comprehensive analysis that focuses on existential threats and safety measures.

Finally, the report’s value would grow manifold if it were to not just state researched facts but also make recommendations. For example, AI regulations have spiked, but what frameworks should governments adopt around benchmarks, regulations and global collaboration, to name a few?

Last but not least, the report just brushes past the economic implications of AI adoption without discussing the costs involved. Training state-of-the-art AI models has become extraordinarily expensive: OpenAI’s GPT-4 cost an estimated $78 million to train and Google’s Gemini Ultra an estimated $191 million.

Perhaps Stanford could look at sector-specific analyses of AI impact, discuss predictive economic models, and demystify job market dynamics. Future editions could also cover initiatives that build transparency, public engagement and educational campaigns, as well as AI’s overall ecological impact. All of this would go a long way in enhancing public perception and trust.

(For more details, you can contact the author via his LinkedIn profile)