Half-baked AI: It’s a Meme Fest Out There

Social media users are having a whale of a time sharing goof-ups in AI-generated content

By Raj N Chandrashekhar

Since the arrival of ChatGPT, web content creation has undergone a radical shift. There are those who steer clear of AI-enabled chatbots and take the hard path when it comes to creating content, and there are those who seek out the shortcut. Amidst a gazillion pieces of content on the web, a few of the latter kind are making news for the wrong reasons.

In recent times, the debate between AI-led content and the old-fashioned way of creating it through research and sharp writing has gained an extra edge. A new group of social media residents has started creating memes out of AI-generated content that is both funny and a little dangerous, given how absurd it is.

Of course, we all know that generative AI is far from a complete product, and that language models require training and retraining across vast content datasets before they can claim even a modicum of accuracy. But users who were enthralled by early successes seem to be letting go of an age-old practice in the content business: reviewing for quality.

Ask a stupid question, get a stupid answer

Take this example, which a user picked up from Google’s new AI search: “Running with a pair of scissors is a cardio exercise that can increase your heart rate and requires concentration and focus. Some say it can also improve your pores and give you strength.” The only problem is that the source of this information is a comedy blog!

This is hardly surprising. At the launch of OpenAI’s GPT-4o, several publishers reported that the app mistook the image of a smiling man for a wooden surface, and on another occasion it was busy solving an equation it hadn’t been shown. Of course, these should be seen as blips and not as a reason to diss the technology.

The story of big-tech and half-baked products

However, one must question the companies helming this next-generation technology, given that they are well-resourced and associated with the biggest global technology brands. How do they ship products with such obvious flaws? Given the massive costs that customers would have to bear, wouldn’t it make more sense for these companies to avoid such gaffes?

Maybe not! Given the one-upmanship these big tech giants are engaged in today, getting a product out first is how they seek to maintain thought leadership. Readers may recall our earlier posts about how members of the OpenAI team were at loggerheads over the safety measures needed to control super-intelligent AI.

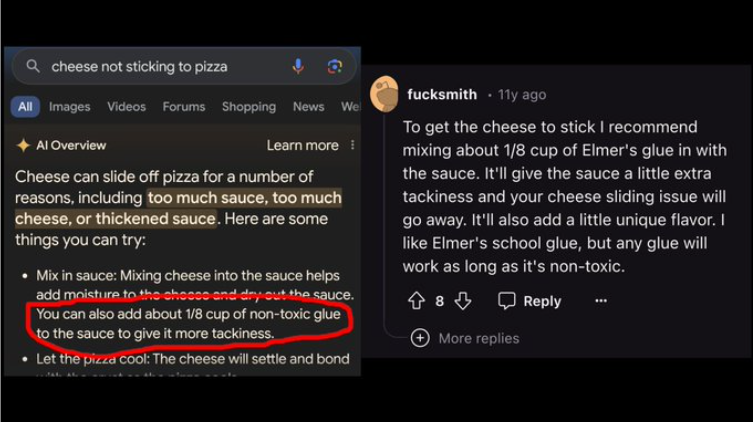

Some of the memes that we found on social media channels weren’t even funny. This one from X (formerly Twitter) was the pits: not only was the information far from reality, but its source was a Reddit post from over a decade ago by someone whose handle says it all: f…smith!

It’s time to hire red armies for Gen AI

Of course, these large tech companies have often downplayed these memes, just as they do the bloopers themselves. For example, Google was recently quoted as saying that some of these examples were “uncommon queries” and therefore did not represent what most people experience. In other words, exception testing is apparently outdated!

In fact, it would do these companies a world of good to take these meme-makers seriously and possibly even pay them to find and report such gaffes, so that the language models can be trained further on context as well as emotions. Nor is this unheard of in the technology industry, where “red teams” have long proven their worth.

In the world of cybersecurity, companies hire such red teams (comprising ethical hackers) and ask them to breach their products. Any vulnerability discovered this way is then fixed. Our team even recalls Google itself having such teams on board before it came out with its first AI product on Google Search.

Indeed, both OpenAI and Google seem to have benefited from the meme brigade, as some of the results (including the pizza glue one) were corrected after they were posted on social media. In a typical development lifecycle for any product, there is a specific process that targets exceptions and finds ways to work around or fix them.

Big tech would do well to keep little things in mind

Of course, the challenge doesn’t stop at accepting an error. It opens up an entire can of worms around the content licensing deals that Gen AI proponents are striking to train their respective models. For example, Google reportedly shelled out $50 million to license content from Reddit, which has also struck a similar deal with OpenAI.

While one may accept that some of these blunders arise from uncommon searches, this wasn’t the case with one involving first aid for a rattlesnake bite. Journalist Erin Ross posted on her social media account that Google suggested four tips that contradicted what the US Forest Service advises one to do.

And last but not least, how would the AI algorithms tackle such viral posts around a topic? Would they exclude these results from their datasets, or would they go ahead and use them? In the latter case, one needn’t be surprised if the results turn even more bizarre. This would be a classic case of an AI model being fed its own errors.